About Human-aware Embodied AI (HEAI) | IROS 2025 Workshop

Embodied AI, i.e., the development of technologies capable of interacting with their physical environment, has witnessed substantial progress in recent years, mainly thanks to the advent of Large Language Models (LLMs), Multimodal Large Language Models (MLLMs), and other large foundation AI approaches. These breakthroughs have enabled human users to interact with embodied agents (e.g., robots) through natural language, instructing an embodied agent to navigate to a given position, search for a specific object, or interact with the object to perform a certain manipulation.

However, while being inherently human-centric, these problems are often addressed without considering the presence of human users and their interaction with the agent throughout the execution of the embodied task. Considering that the long-term goal of Embodied AI is to create a Co-Habitat for humans and agents, bi-directional communication (i.e., human-to-agent and agent-to-human) emerges as a key challenge that must be addressed to effectively resolve uncertainty during the full cycle of robotic perception, reasoning, planning, action, and verification.

Yet the problem of creating an intuitive, effective, trustworthy, and robust human-agent Co-Habitat remains largely unexplored.

This workshop will address the challenges of creating seamless human-agent Co-Habitats, focusing on trustworthiness (when agents don't know), robustness (when agents ask for help), and intuitive collaboration (bi-directional interaction). We aim to explore methods for integrating MLLMs into embodied agents for effective multimodal interactions, identifying and mitigating the risks of model hallucinations, and modeling human error and communication patterns.

Schedule

The tentative schedule is as follows:

| Time | Talk | Comments |

|---|---|---|

| 13:00 - 13:10 | Welcome & Opening Remarkss | |

| 13:10 - 13:40 | Roozbeh Mottaghi | Leveraging Human Experience in Robotics |

| 13:40 - 14:10 | He Wang | Generalizable and Functional Dexterous Grasping and Manipulation via Sim2Real |

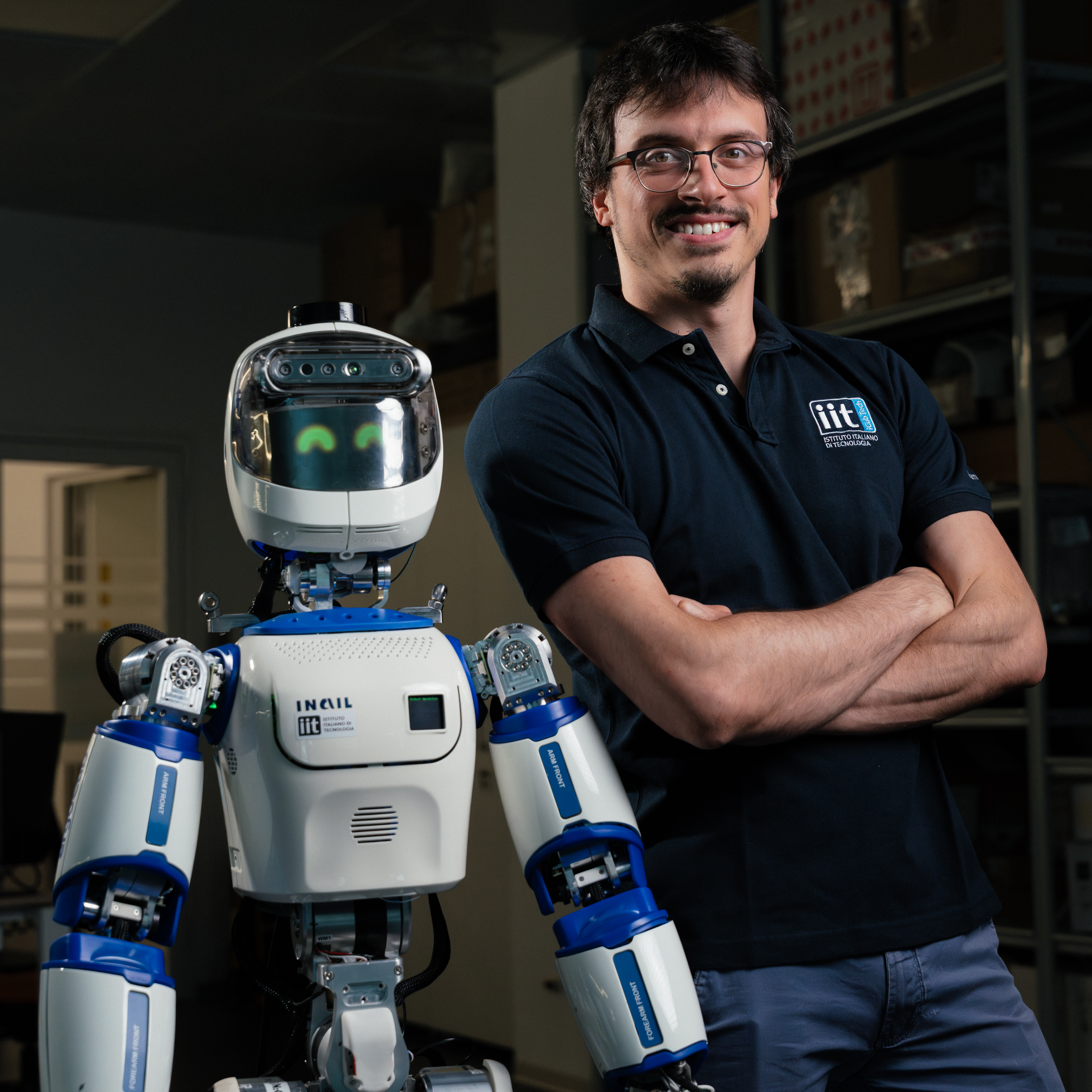

| 14:10 - 14:40 | Stefano Dafarra | ergoCub: the Embodied AI human aware humanoid |

| 14:40 - 15:30 | Coffee Break, Poster session & AMA (Ask Me Anything) panel | |

| 15:30 - 15:55 | Best posters presentation | 8 minutes each + 3 min Q&A |

| 15:55 - 16:25 | Angelica Lim | Humans Aren't Robots: Expressive, Multimodal Human Simulation for Robotics |

| 16:25 - 16:55 | Gaofeng Li | Agile Telerobotic Systems: Embodied and Intelligent Robotic Manipulation Learning with Human-in-the-Loop |

| 16:55 - 17:00 | Closing Remarks | |

Call for Contributions

We call for papers covering relevant topics, including but not limited to:

- Collaborative Human-Agent interaction
- Multimodal Human-Agent interaction
- Interaction-enabled simulators / benchmarks
- Interaction personalization
- Interaction adaptation
- Uncertainty understanding
- Foundation models (VLM/VLAs)
- Human behaviour modeling
- Embodied reasoning
- Ethics & Safety

Submission Guidelines

We invite submissions of up to 4 pages (excluding references) of non-archival papers using the official template. Submissions previously published elsewhere are also welcome.

To submit a paper, please see the official OpenReview page.

Important Dates

- 📅 Submission opens: June 15th, 2025

- ⏳ Submission deadline: September 26th, 2025

- ✅ Acceptance notification: October 8th, 2025

NEWS! The authors of the two best papers will have their workshop registration covered. In addition, travel support is available.

Further details about the Poster Presentation will be shared soon.

Organizing Committee

Francesco Taioli

Yiming Wang

Xinyuan Qian

Jacob Krantz

Angel X Chang

Alessandro Vinciarelli

Alessio Del Bue

https://www.iit.it/it/people-details/-/people/alessio-delbue

Marco Cristani